Projects

Small applications I built in my free time to play around with new technology

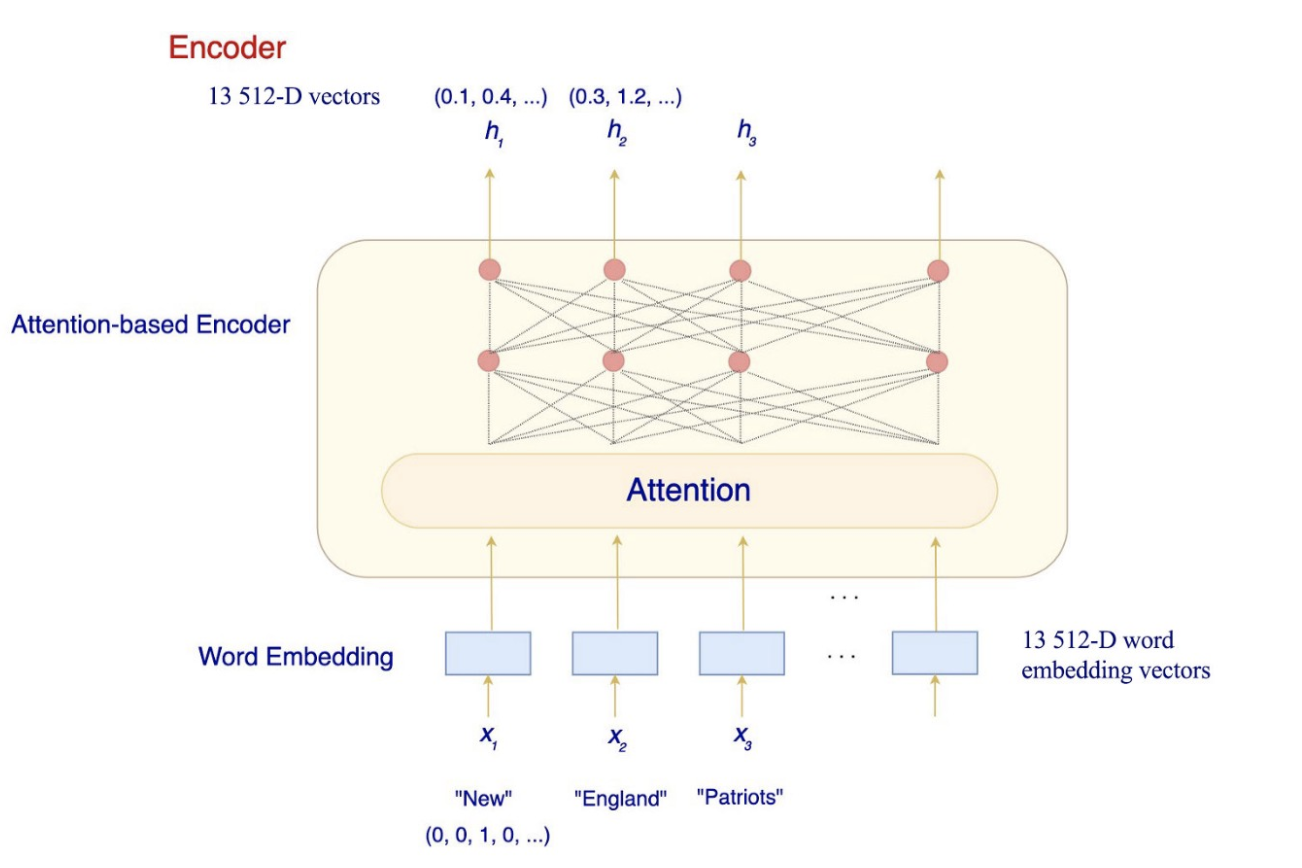

Using BERT for multi-class classification

Transformers helped make transfer learning practical for natural language tasks; until then, it had largely been limited to some areas of computer vision. Here, I attempt to train a BERT network on just over 6,000 training examples to identify the industry a company belongs to from a textual description of its business. View on Colab

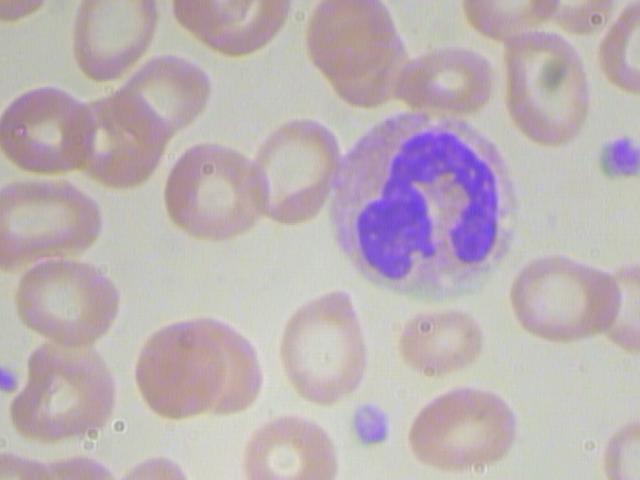

Training U-Nets to segment RBCs

U-Nets resemble the encoder-decoder architecture so often employed in language networks. They take the input image, reduce it to a concise representation, and then expand it again to arrive at the desired transformed image. Here, I use them to generate masks that segment the nuclei lobes of red blood cells. View on Colab

Inspecting BERT

This started as a way to investigate why I failed to make true transfer learning work with BERT, and it led me to study explainability and interpretability for transformers more generally. View on Colab

Scheme Interpreter in Haskell

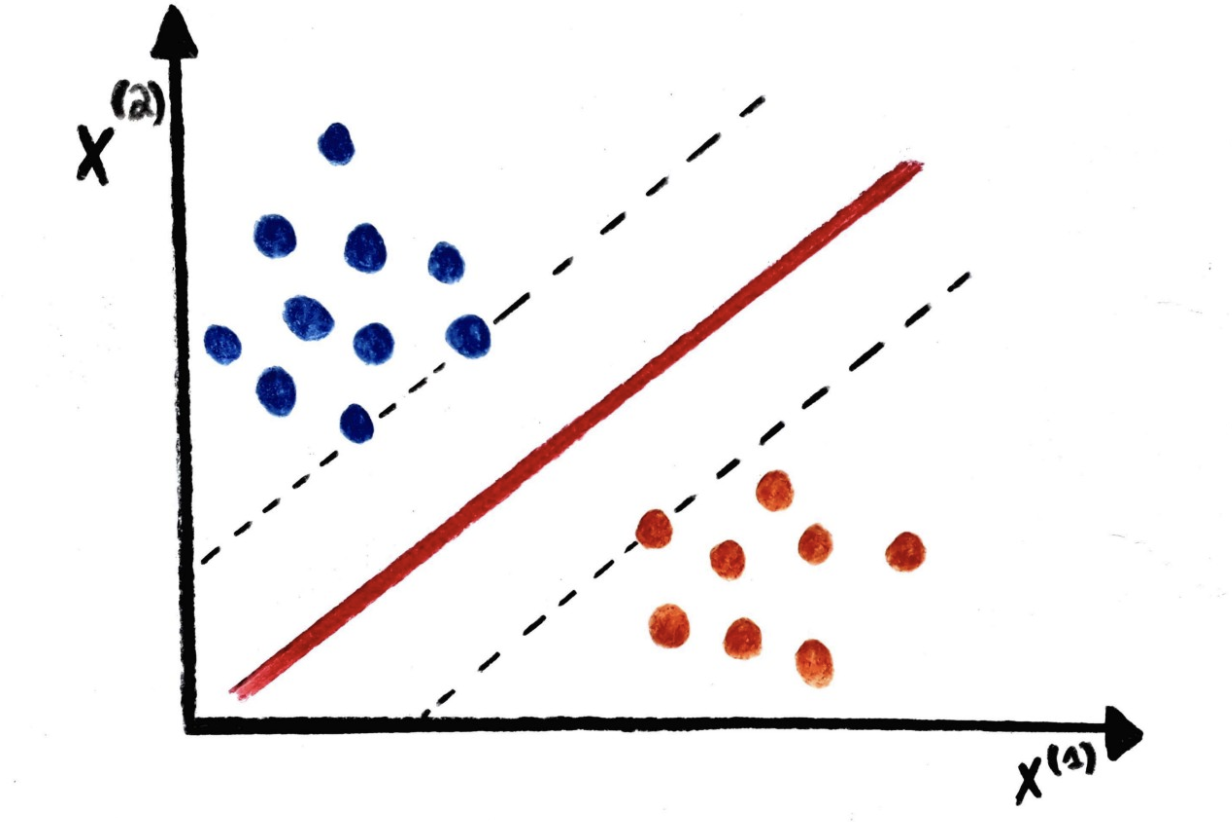

How do you classify long sequences into multiple classes? Naive bag-of-words models cannot capture semantics: synonyms are treated as unrelated words. The document vectors they output also grow with the vocabulary, so they balloon in size very quickly. I combine TF-IDF and word2vec to arrive at rich document vectors that can be classified by SVMs. View on Colab
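One common way to combine the two, and not necessarily what the notebook does, is to average a document's word vectors using TF-IDF scores as weights. The sketch below uses only the standard library; the 2-d `TOY_EMBEDDINGS` are made up for illustration (real vectors would come from a trained word2vec model), the IDF is a smoothed variant, and the function names are hypothetical.

```python
import math
from collections import Counter


def tfidf_weights(docs):
    """Return one {token: tf-idf weight} dict per tokenized document."""
    n_docs = len(docs)
    # Document frequency: the number of documents each token appears in.
    df = Counter(tok for doc in docs for tok in set(doc))
    weights = []
    for doc in docs:
        tf = Counter(doc)
        weights.append({
            # Relative term frequency times a smoothed inverse document frequency.
            tok: (count / len(doc)) * (math.log((1 + n_docs) / (1 + df[tok])) + 1.0)
            for tok, count in tf.items()
        })
    return weights


def document_vector(doc_weights, embeddings, dim):
    """TF-IDF-weighted average of the word vectors of one document."""
    total = [0.0] * dim
    weight_sum = 0.0
    for tok, w in doc_weights.items():
        vec = embeddings.get(tok)
        if vec is None:  # skip out-of-vocabulary tokens
            continue
        for i in range(dim):
            total[i] += w * vec[i]
        weight_sum += w
    if weight_sum == 0.0:
        return total  # no known tokens: fall back to the zero vector
    return [x / weight_sum for x in total]


# Assumption: toy 2-d embeddings, invented for this example only.
TOY_EMBEDDINGS = {
    "bank": [1.0, 0.0],
    "lender": [0.9, 0.1],
    "farm": [0.0, 1.0],
    "grower": [0.1, 0.9],
}

docs = [["bank", "lender", "bank"], ["farm", "grower"], ["lender", "farm"]]
doc_vectors = [
    document_vector(w, TOY_EMBEDDINGS, dim=2) for w in tfidf_weights(docs)
]
```

Each document ends up with a vector of the embedding dimension, regardless of vocabulary size, and near-synonyms such as "bank" and "lender" pull it in the same direction. These vectors would then be the input features for an SVM.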

node2vec, random walks, core numbers

The idea behind word2vec - words that occur in the same neighbourhood are similar in meaning or context - can be extended to associative networks as well. Suitably parameterized random walks can help discover a highly representative neighbourhood or context for a node. View on GitHub
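As a rough illustration of what "suitably parameterized" can mean, here is a minimal sketch of a node2vec-style biased walk on an unweighted, undirected graph. The return parameter `p` and in-out parameter `q` follow the usual node2vec convention; `TOY_GRAPH` and the function name are assumptions made for this example and are not taken from the repository.

```python
import random


def node2vec_walk(graph, start, length, p=1.0, q=1.0, rng=None):
    """One second-order biased random walk.

    graph maps each node to the set of its neighbours.
    p: return parameter (low p makes stepping back to the previous node likelier).
    q: in-out parameter (q < 1 pushes the walk outward, q > 1 keeps it local).
    """
    rng = rng or random.Random()
    walk = [start]
    while len(walk) < length:
        cur = walk[-1]
        neighbours = sorted(graph[cur])
        if not neighbours:
            break  # dead end: stop early
        if len(walk) == 1:
            # The first step has no previous node, so it is uniform.
            walk.append(rng.choice(neighbours))
            continue
        prev = walk[-2]
        weights = []
        for nxt in neighbours:
            if nxt == prev:            # distance 0 from prev: go back
                weights.append(1.0 / p)
            elif nxt in graph[prev]:   # distance 1 from prev: stay close
                weights.append(1.0)
            else:                      # distance 2 from prev: move away
                weights.append(1.0 / q)
        walk.append(rng.choices(neighbours, weights=weights, k=1)[0])
    return walk


# Assumption: a small toy graph, invented for this example only.
TOY_GRAPH = {
    "a": {"b", "c"},
    "b": {"a", "c", "d"},
    "c": {"a", "b"},
    "d": {"b", "e"},
    "e": {"d"},
}

walks = [
    node2vec_walk(TOY_GRAPH, node, length=6, p=1.0, q=0.5, rng=random.Random(0))
    for node in sorted(TOY_GRAPH)
]
```

Each walk plays the role of a sentence: feeding the walks to a skip-gram model such as word2vec yields one embedding per node.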

ResNets and transfer learning

Pre-trained convnets can be used as fixed feature extractors - detecting edges and shapes in the image. This is more efficient than starting from a clean slate because we don’t have to reinvent the wheel. ResNets trained on the ImageNet database are a superb example of this idea - I use them to achieve very high accuracy on a small, difficult dataset. View on Colab